My Maria ... DB

Thursday, September 26, 2013 at 12:31PM

You set my soul free like a ship sailing on the sea

~ "My Maria" - B.W. Stevenson (later Brooks & Dunn)

As a former Oracle employee I was pleased today to see the last of the stirring comeback for Oracle Team USA, and thrilled to see some footage shot from 101 California St. in San Francisco by former co-workers at Booz Allen. Faulkner was right: "The past is never dead. It's not even past."

The best part of that past world was working for Chris Russell and meeting with Larry Ellison every Friday (3:00PM or whenever Larry showed up to 5:00PM) to go over our progress with the hosted Oracle offering Oracle Business OnLine. Chris is a fantastic manager and a great person -- we got BOL (as it was called) rolling, and I was thrilled just coming out of our first couple of Larry-meetings with the feeling: "He liked it! We didn't get fired!" Little secret: Larry was a better manager than he ever gets credit for, and/but he is magnificently competitive!

Oracle is a terrific database, but when Oracle acquired Sun Microsystems they also acquired the previously-acquired-by-Sun MySQL database. MySQL is a nice open-source database, and was at the core of Ruby on Rails development efforts practically all the way back to RoR 1.0 (released in December 2005). Rails has long since broken that direct linkage, but it was a nice luxury to tie in MySQL and get ActiveRecord object-relational mapping for free. I've missed MySQL, and there have been varied worries that MySQL would be the red-headed stepchild in the Oracle household, but now Maria has stepped in to take our worries away.

"Maria" in this case is MariaDB. As Wikipedia notes, "MariaDB is a community-developed fork of the MySQL relational database management system, the impetus being the community maintenance of its free status under the GNU GPL." Having a good, trusted, universal SQL database was a terrific luxury, and that sounds enough like the old MySQL battle cry, so let's get started.

The first thing we'll want to do is clear out any installations or vestiges of MySQL that currently exist on our servers. For the MariaDB example, I'm going to clear out both my development machine (a MacBook Pro running OS X 10.8.5) and my target machine (a Linux box running Ubuntu 13.04 Raring Ringtail). Tom Keur offers a nice example here: How to remove MySQL completely Mac OS X Leopard

$ sudo rm /usr/local/mysql

$ sudo rm -rf /usr/local/mysql*

$ sudo rm -rf /Library/StartupItems/MySQLCOM

$ sudo rm -rf /Library/PreferencePanes/My*

$ sudo rm -rf /Library/Receipts/mysql*

$ sudo rm -rf /Library/Receipts/MySQL*

$ sudo rm /etc/my.cnf

That clears out the Mac version, and on Ubuntu a simple...

$ sudo apt-get remove --purge mysql-server mysql-client mysql-common

... should do the trick. Now we'll install MariaDB. For work on a Macintosh we can let Homebrew do most of the work for us. As MariaDB is a drop-in replacement for MySQL, it ships the familiar mysql_install_db script, which we can run to set up its initial databases:

$ brew install mariadb

$ unset TMPDIR

$ mysql_install_db

$ cp /usr/local/Cellar/mariadb/5.5.32/homebrew.mxcl.mariadb.plist \
    ~/Library/LaunchAgents/homebrew.mxcl.mariadb.plist

The final plist copy ensures that MariaDB starts up whenever we log in to the Macintosh. It's just a little more work on Ubuntu:

$ sudo apt-get install mariadb-server

$ sudo apt-get install libmariadbd-dev

The second call to install libmariadbd-dev is something we'll need to install the mysql2 gem on Ubuntu. With our database installed, we'll now install the mysql2 gem to be our database adapter. On the Mac, we point the build at Homebrew's MariaDB:

$ sudo gem install --no-rdoc --no-ri mysql2 -- \
    --with-mysql-dir=$(brew --prefix mariadb) \
    --with-mysql-config=$(brew --prefix mariadb)/bin/mysql_config

The commands to start and stop the database server on Ubuntu will be familiar to any Linux sysadmin:

$ sudo /etc/init.d/mysql start

$ sudo /etc/init.d/mysql stop

Now that the installation is complete, let's try a nice standard Rails app to confirm that our DB and adapter are working properly. Let's create a new Rails app and add in the necessary components to run it. Since I've just updated my development Mac to Apple's new "Mavericks" OS X 10.9 release, let's call our app Mavericks, put it on MySQL, and add in the web server 'thin':

$ rails new mavericks -d mysql

$ cd mavericks

$ gem install thin

Fetching: eventmachine-1.0.3.gem (100%)

Building native extensions. This could take a while...

Successfully installed eventmachine-1.0.3

Fetching: daemons-1.1.9.gem (100%)

Successfully installed daemons-1.1.9

Fetching: thin-1.6.0.gem (100%)

Building native extensions. This could take a while...

Successfully installed thin-1.6.0

3 gems installed

Now the final step is to create a test mavericks_development database, and of course we'll add thin to our Gemfile. We'll want our first MariaDB app to do a bit, so let's give it a controller to show us some pages. Here's the command to generate our controller 'pages', and to stub in 'index' and 'about' actions:

$ rails generate controller pages index about
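The database step mentioned above hinges on the development settings in config/database.yml. Here is a minimal sketch of what I'd expect that section to look like after `rails new mavericks -d mysql`, parsed with Ruby's standard YAML library so we can sanity-check it. The username, empty password and socket path are assumptions about a default local install, not values taken from this machine:

```ruby
require "yaml"

# Assumed contents of the development section of config/database.yml.
# The socket path and root user are guesses at local defaults; adjust to taste.
DATABASE_YML = <<~YAML
  development:
    adapter: mysql2
    encoding: utf8
    database: mavericks_development
    pool: 5
    username: root
    password:
    socket: /tmp/mysql.sock
YAML

config = YAML.load(DATABASE_YML)
dev = config.fetch("development")

# MariaDB is reached through the same mysql2 adapter that MySQL uses.
puts "#{dev['adapter']} -> #{dev['database']}"
```

With settings along those lines, running `rake db:create` from the app directory is the usual way to create the database, and adding the line `gem 'thin'` to the Gemfile takes care of the web server.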

We'll create some "Hello World" text in our pages_controller and pass that text to be displayed in our index.html.erb file.
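As a rough illustration of that hand-off, here is a self-contained sketch that mimics it with plain Ruby and the standard ERB library rather than Rails itself. The class below is only a stand-in for the generated controller, and the names @greeting and view_binding are hypothetical; in the real app the action would live in app/controllers/pages_controller.rb and the template in app/views/pages/index.html.erb:

```ruby
require "erb"

# Stand-in for the Rails controller: the index action sets an instance
# variable, which is how a real controller hands data to its view.
class PagesController
  def index
    @greeting = "Hello World"
  end

  # Hypothetical helper exposing this object's binding, so the template
  # can see @greeting (Rails does the equivalent for us behind the scenes).
  def view_binding
    binding
  end
end

# Stand-in for the contents of index.html.erb.
INDEX_TEMPLATE = "<h1><%= @greeting %></h1>"

controller = PagesController.new
controller.index
html = ERB.new(INDEX_TEMPLATE).result(controller.view_binding)

puts html
```

In the Rails app itself only the body of the index action and the one-line template would be needed; the rest is scaffolding to make the sketch run on its own.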

Once we've added these in we can fire the Mavericks application up:

$ rails server

We'll go to the URL of our pages' index page (http://localhost:3000/pages/index with the default server settings), and here we are:

YAY! Rails is up and running, with our MySQL replacement MariaDB under the hood. Let's take a look at the Rails Environment, and we can confirm that all is well with our mysql2 adapter as well.

Victory! It's not quite an America's Cup regatta triumph, but we've got a nice defensibly-open database under our application and we can roll forward Oraclelessly from here. But who cares, really? Oracle's MySQL goes back to the future with MariaDB, but where does that take us from here?

The answer is: it's all about architecture.

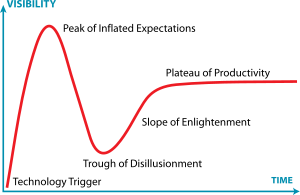

As Proust said, "The real voyage of discovery consists not in seeking new landscapes, but in having new eyes." Once upon a time the IT landscape was all mainframe-based, and no one got fired for buying IBM. Then came the minicomputer and PC ages, both (as DEC's disappearance and Microsoft's present travails show us) replaced by the web. But the web isn't the leading edge of systems design anymore. With MariaDB we've reopened the data layer and in my next posts I'll explore the soul of these new machines -- web enabled and handheld, event-driven and big-data ready. The right architecture will be the DeLorean for our trip into the new millennium of computer services.

John Repko | Comments Off |
